What is a custom robots.txt file? How to implement it in a blog

Hello friends, what is a robots.txt file? How do you add it to a blog? How does it work? Why is it necessary to put it on your blog? What are its advantages? How do you create a robots.txt file? If you are a blogger, you probably have questions like these about robots.txt too. So today, in this article, we will learn about robots.txt in full detail.

If you have been blogging for a long time, you probably already know about robots.txt. But mistakes often creep in while writing it. So today, in this post, we will learn how to write it properly and add it to our blog. And if you are a new blogger, this article will give you complete information about robots.txt.

What is robots.txt:

Robots.txt is a file that we need to add to our blog. As you all know, .txt is the extension of the plain text file format, so robots.txt is simply a text file. Robots.txt can be called the bodyguard or security guard of our blog: it tells search engine robots which pages of our blog they are not allowed to crawl. Well-behaved crawlers such as Googlebot follow these instructions, although the file cannot physically stop a crawler that chooses to ignore it.

Suppose you do not want some pages of your blog to appear in search engines, or you do not want search engines to index your blog's categories, labels or tags. You can list all of these in the Disallow section of the robots.txt file. Let us now see how robots.txt works.
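As a minimal sketch, the Python snippet below uses the standard library's `urllib.robotparser` to show how a Disallow rule keeps crawlers away from label pages. It assumes a Blogger-style blog at the placeholder address `example.blogspot.com`, where label pages live under `/search/label/`; the post URL is also made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# A small robots.txt that blocks Blogger's search and label pages for every crawler.
# example.blogspot.com is a placeholder address, not a real blog.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

label_page = "https://example.blogspot.com/search/label/SEO"
post_page = "https://example.blogspot.com/2023/05/my-first-post.html"

# Label pages start with the "/search" prefix, so they are disallowed.
print(parser.can_fetch("Googlebot", label_page))  # False
# Ordinary posts match no Disallow rule, so they stay crawlable.
print(parser.can_fetch("Googlebot", post_page))   # True
```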

How does robots.txt work:

As I told you a little while ago, robots.txt can be called the bodyguard or security guard of our blog, because it protects the privacy of our site in this way. When a search engine visits your blog to crawl and rank it, robots.txt tells it which pages to crawl and which to skip. This makes it easier for search engines to rank the content of your blog, and in this way your blog becomes more SEO friendly.
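To make the "what to crawl and what to skip" idea concrete, here is a rough sketch of how a polite crawler might filter its to-do list against robots.txt before fetching anything. The function name `urls_to_crawl`, the private page path and the sample URLs are hypothetical; real search engine crawlers are far more complex than this.

```python
from urllib.robotparser import RobotFileParser


def urls_to_crawl(robots_txt: str, user_agent: str, urls: list[str]) -> list[str]:
    """Return only the URLs that robots.txt allows `user_agent` to fetch.

    Hypothetical helper for illustration: it checks the rules, it does not crawl.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


# Placeholder rules for a hypothetical blog at example.blogspot.com.
robots = """\
User-agent: *
Disallow: /search
Disallow: /p/private-page.html
"""

queue = [
    "https://example.blogspot.com/",
    "https://example.blogspot.com/2023/05/my-first-post.html",
    "https://example.blogspot.com/search/label/SEO",
    "https://example.blogspot.com/p/private-page.html",
]

print(urls_to_crawl(robots, "Googlebot", queue))
# ['https://example.blogspot.com/', 'https://example.blogspot.com/2023/05/my-first-post.html']
```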

Advantages of Robots.txt File:

We should add a robots.txt file to our blog; it is a must for our site. It makes our blog SEO friendly, and it has many advantages:

- With the help of robots.txt, you can keep any part of your blog's content away from search engines.

- You can also ask search engines not to crawl pages that contain "sensitive information". Keep in mind that robots.txt itself is public, so anyone can read the paths you list in it.

- You can also use robots.txt to guide Google's bots as they crawl and index the content of your blog.

- With the help of robots.txt, search engines can easily find out where your blog's sitemap is located.

- Through it, you can prevent your blog's categories, labels, tags, images, documents, etc. from being crawled and indexed by search engines.

- Through it, you can let search engines index the content you want, and keep the rest away from their eyes.
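The sitemap advantage in the list above works because crawlers read the Sitemap line straight from robots.txt. Here is a small sketch with Python's `urllib.robotparser` (its `site_maps()` method needs Python 3.8 or newer); the blog address and sitemap path are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt for a hypothetical blog at example.blogspot.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Allow: /

Sitemap: https://example.blogspot.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the Sitemap URLs listed in the file, or None if there are none.
print(parser.site_maps())  # ['https://example.blogspot.com/sitemap.xml']
```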

How to create robots.txt:

Many websites on the Internet call themselves robots.txt file generators and will create a robots.txt file for your site. But I would advise you not to use them. Some of them may store your data, which could cause trouble for you later, so it is better to stay away from them. Here I am telling you the genuine, correct method, the one Blogger itself describes; you can confirm it on Blogger's Help forum.
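If you write the file by hand instead of using a generator, a quick check for typos can catch the kind of mistakes mentioned at the start of this post. The sketch below is a hypothetical helper, `find_suspicious_lines`, that flags lines with no colon or whose directive is not one of the four used in this article (`User-agent`, `Allow`, `Disallow`, `Sitemap`). It is not an official validator, and real crawlers may understand other directives.

```python
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap"}


def find_suspicious_lines(robots_txt: str) -> list[tuple[int, str]]:
    """Return (line_number, line) pairs that look like typos.

    Hypothetical lint check: only the four directives discussed here count as known.
    """
    problems = []
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if not line:
            continue  # blank lines separate groups, so they are fine
        if ":" not in line:
            problems.append((number, raw))
            continue
        directive = line.split(":", 1)[0].strip().lower()
        if directive not in KNOWN_DIRECTIVES:
            problems.append((number, raw))
    return problems


draft = """\
User-agent: *
Dissallow: /search
Allow /
Sitemap: https://example.blogspot.com/sitemap.xml
"""

print(find_suspicious_lines(draft))
# [(2, 'Dissallow: /search'), (3, 'Allow /')]
```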

What is User-agent:

This is the name of the robot you are writing the rules for, such as Googlebot, Googlebot-Image, AdsBot-Google, Googlebot-Mobile, Mediapartners-Google, etc. In the User-agent line, you write the name of the robot that the following rules are meant for; to give the same rules to all robots at once, you use the * sign. Through these rules, you decide which data on your site a search engine can see and which data it cannot.
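Here is a sketch of how separate User-agent groups give different robots different rules. It assumes a made-up rule that keeps Googlebot-Image out of a hypothetical `/images/` folder while every other robot follows the `*` group; the blog address is a placeholder. A robot that has its own group follows only that group.

```python
from urllib.robotparser import RobotFileParser

# Two groups: one only for Google's image crawler, one for every other robot.
# The /images/ folder and example.blogspot.com are hypothetical, for illustration only.
ROBOTS_TXT = """\
User-agent: Googlebot-Image
Disallow: /images/

User-agent: *
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

photo = "https://example.blogspot.com/images/cover.jpg"
label = "https://example.blogspot.com/search/label/SEO"

print(parser.can_fetch("Googlebot-Image", photo))  # False: its own group blocks /images/
print(parser.can_fetch("Googlebot", photo))        # True: the * group does not block /images/
print(parser.can_fetch("Googlebot-Image", label))  # True: its own group has no /search rule
```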

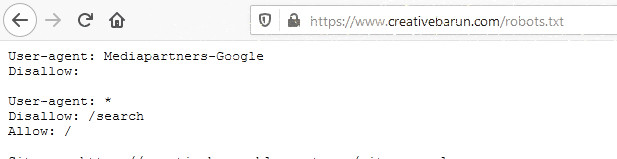

User-agent: Mediapartners-Google:

Mediapartners-Google is Google AdSense's robot. We write it in the User-agent line when we use AdSense on our site. If you show AdSense ads on your blog or website, you can add a Mediapartners-Google group to your robots.txt; this lets the AdSense robot read your pages so that it can show relevant ads on them. If you don't use AdSense, you don't need this group.
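A minimal sketch of the common Blogger pattern, assuming an AdSense blog at the placeholder address `example.blogspot.com`: the Mediapartners-Google group has an empty Disallow line, which means nothing is blocked for it, while every other robot is kept out of `/search`. Python's parser checks rules top to bottom and uses the first match, so `Disallow: /search` is listed before `Allow: /`; Google itself picks the most specific matching rule, and with this file both approaches give the same answers.

```python
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt for a hypothetical AdSense blog at example.blogspot.com.
ROBOTS_TXT = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: https://example.blogspot.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

label = "https://example.blogspot.com/search/label/SEO"
post = "https://example.blogspot.com/2023/05/my-first-post.html"

# The AdSense crawler has an empty Disallow, so even label pages are open to it.
print(parser.can_fetch("Mediapartners-Google", label))  # True
# Other robots fall back to the * group, which blocks /search,
print(parser.can_fetch("Googlebot", label))             # False
# but still allows everything else.
print(parser.can_fetch("Googlebot", post))              # True
```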

User-Agent Sign: *

We use the * sign in the User-agent line when we want to give the same instructions to all search engine robots. In other words, the rules in that group apply to every search engine.

Allow Command:

Inside Allow, we write the parts of our site that search engines may access. Whatever you list here, search engine bots are allowed to crawl and index.

Disallow Command:

Through the Disallow command, we can stop search engine robots from accessing whatever parts of our site we choose. Anything we do not want indexed in search engines, such as unwanted pages, unwanted posts, categories, labels and tags, can be listed as links inside Disallow.

Sitemap Command:

Here you tell search engines the path of your site's sitemap, which makes it easier for them to find and index your content. The sitemap links to all the content on our site. If you want to know what a sitemap is and how to create one, you can read our separate article on that.

How to check robots.txt file:

Now the question arises: how do you check whether a robots.txt file exists on your website? It is very easy. Type your site's address in your web browser's URL bar, add /robots.txt to the end, and open it. You will then know whether this file is on your site or not.
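The browser check described above can also be scripted. The sketch below builds the `/robots.txt` address from any page URL of your blog with `urllib.parse.urljoin` and, if you choose to call it, downloads and parses the file with `RobotFileParser.read()`. The blog addresses are placeholders, the helper names are hypothetical, and the download step needs an internet connection.

```python
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser


def robots_url(page_url: str) -> str:
    """Return the robots.txt address for the site that hosts page_url."""
    # A leading "/" makes urljoin replace the whole path with /robots.txt.
    return urljoin(page_url, "/robots.txt")


def fetch_robots(page_url: str) -> RobotFileParser:
    """Download and parse the live robots.txt (requires network access)."""
    parser = RobotFileParser(robots_url(page_url))
    parser.read()
    return parser


print(robots_url("https://example.blogspot.com/2023/05/my-first-post.html"))
# https://example.blogspot.com/robots.txt

# Example usage against a real blog (uncomment to run; needs a connection):
# rules = fetch_robots("https://yourblog.blogspot.com/")
# print(rules.site_maps())
```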

Apart from this, you can also check in Google Search Console whether robots.txt is on your site. Log in to Google Search Console, then click Crawl >> Robots.txt Tester.

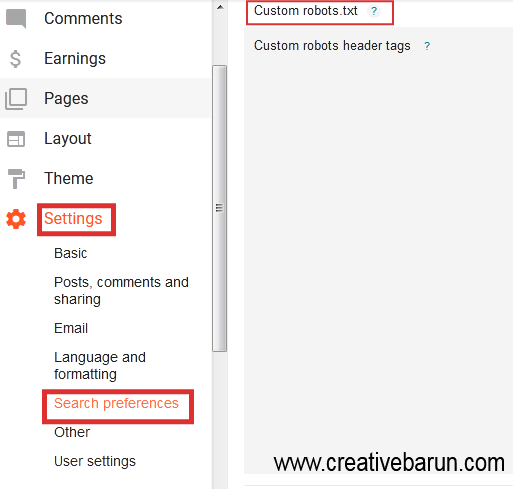

How to Add Robots.txt File to Blog:

1) First, go to Blogger.com.

2) Now go to Settings.

3) Then go to Search Preferences.

4) Now click Edit next to Custom robots.txt.

5) After clicking Edit, select "Yes".

6) As soon as you select Yes, a text editor box will appear. Copy the robots.txt code shown above and paste it into this box.

7) On the Sitemap line, write the link to your blog's sitemap.

8) Finally, click Save Changes to save the settings.

So this is how you can add a robots.txt file to your blog. You can also adapt the robots.txt code to your site. For example, if you do not use AdSense, leave Mediapartners-Google out of the code. If you also upload images and videos, add Googlebot-Image and Googlebot-Video to the code as well. In this way, you can tailor the code to your site.
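To illustrate adapting the code to your own site, here is a hypothetical helper, `build_robots_txt`, that adds the Mediapartners-Google group only when AdSense is used and adds Googlebot-Image and Googlebot-Video groups only when asked. The flag names, the choice to give the media crawlers full access, and the `/sitemap.xml` path are assumptions for illustration; this is not a Blogger feature.

```python
def build_robots_txt(blog_url: str, uses_adsense: bool, media_bots: bool) -> str:
    """Assemble a Blogger-style robots.txt from a few yes/no choices.

    Hypothetical helper: the groups and paths mirror the pattern described in
    this article and should be adjusted for your own blog.
    """
    blog_url = blog_url.rstrip("/")
    groups = []
    if uses_adsense:
        # An empty Disallow lets the AdSense crawler see every page.
        groups.append("User-agent: Mediapartners-Google\nDisallow:")
    if media_bots:
        # Assumption: give the image and video crawlers full access.
        for bot in ("Googlebot-Image", "Googlebot-Video"):
            groups.append(f"User-agent: {bot}\nAllow: /")
    # Every other robot skips search and label pages.
    groups.append("User-agent: *\nDisallow: /search\nAllow: /")
    groups.append(f"Sitemap: {blog_url}/sitemap.xml")
    # A blank line separates each group, as in a hand-written robots.txt.
    return "\n\n".join(groups) + "\n"


print(build_robots_txt("https://example.blogspot.com/", uses_adsense=False, media_bots=True))
```

With `uses_adsense=False`, the Mediapartners-Google group is simply left out, which matches the advice above for blogs without AdSense.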

Why robots.txt is important for our sites:

Having a robots.txt file on the website helps search engines find the website's sitemap. It also tells search engine bots what they should and should not crawl on the website. When search engine bots visit a website or blog, they crawl its content according to the instructions in the robots.txt file.

On the other hand, if search engine bots do not find a robots.txt file on the website, they have no instructions about which content to skip, so they start crawling all the content of the website or blog.

That is a problem if our website has some private data that we do not want indexed: without a robots.txt file, search engine bots will crawl and index all the data on our site. That is why we should definitely add a robots.txt file to our website; it is important for every website and blog.
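The difference described above can be seen with Python's `urllib.robotparser`: with no rules, every URL is allowed, while a single Disallow line changes the answer for the pages it covers. The page URL is a placeholder. Keep in mind that robots.txt only asks crawlers not to crawl a page; it is not a lock, so well-behaved bots skip the page but the data itself is not protected.

```python
from urllib.robotparser import RobotFileParser

# Placeholder URL on a hypothetical blog.
private_page = "https://example.blogspot.com/p/private-page.html"

# Case 1: an empty robots.txt, which places no restrictions on crawlers.
no_rules = RobotFileParser()
no_rules.parse([])
print(no_rules.can_fetch("Googlebot", private_page))    # True: nothing is off limits

# Case 2: a robots.txt that disallows the private page.
with_rules = RobotFileParser()
with_rules.parse(["User-agent: *", "Disallow: /p/private-page.html"])
print(with_rules.can_fetch("Googlebot", private_page))  # False: well-behaved bots skip it
```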

Conclusion:-

So friends, you now know everything about robots.txt: what it is, how it works, how to add it to your blog, why your blog needs it, and what its benefits are. I hope you liked this post and understood everything I explained; I tried to keep it as simple as possible. If you still have any questions, ask us in the comments. If you liked the post, please share it with all your friends on social media. Thank you.
